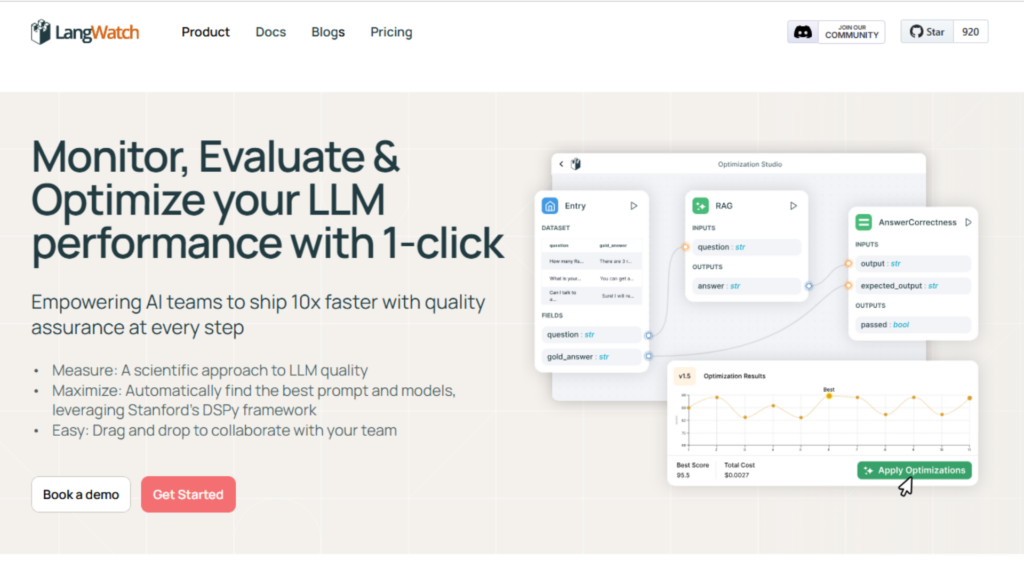

Managing the performance and accuracy of large language models (LLMs) requires a structured approach, especially when scaling AI applications.

LangWatch offers a practical way to monitor and evaluate LLM pipelines, helping developers track usage, analyze costs, and refine their models with real-time insights.

As someone working with AI systems, I understand the challenges of keeping models efficient while balancing cost and accuracy. LangWatch addresses this by providing full tracing of every LLM message.

With more than 40 evaluation metrics available, it supports automated assessments within CI/CD pipelines, helping teams confirm that models meet quality standards before each release.

This review explains how LangWatch helps developers maintain reliable AI systems. It covers key features, performance insights, and how the tool supports continuous improvement, which together make it a valuable addition to an LLM workflow.

What is LangWatch?

LangWatch is a monitoring and evaluation platform designed to manage large language model (LLM) applications.

It helps businesses and developers track usage, analyze performance, and manage costs effectively. With structured monitoring, LangWatch helps maintain accuracy and reliability in AI-driven tools.

Core Functionality and Purpose

LLMs require continuous assessment to function efficiently. LangWatch provides tools for tracking performance, identifying inefficiencies, and making data-driven improvements.

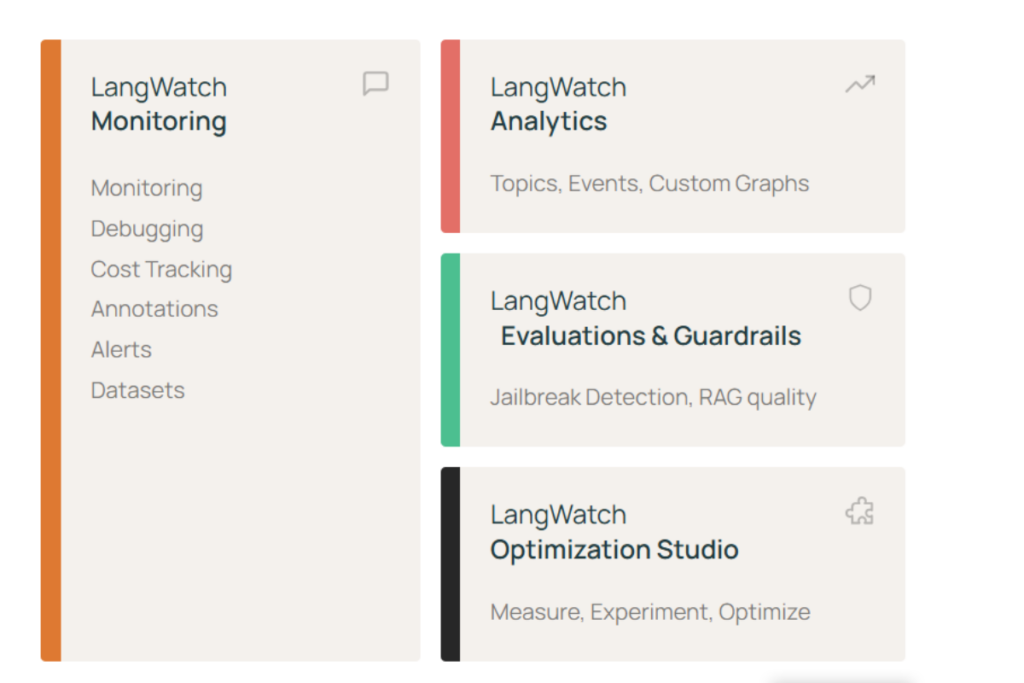

Key features include:

- Real-Time Monitoring – Logs every interaction to provide a complete record of AI responses.

- Evaluation Metrics – Offers over 40 automated assessments to measure accuracy, consistency, and relevance.

- Cost Tracking – Monitors API usage to help manage expenses.

- Debugging and Error Detection – Highlights issues that need attention.

- Seamless Integration – Works within CI/CD pipelines to assess models before deployment.

With these tools, businesses can maintain AI models that consistently meet their intended goals.

Who Uses LangWatch?

LangWatch is valuable for various industries and professionals working with AI applications.

- Tech Companies & AI Startups – Helps maintain performance and manage costs in AI-driven products.

- Enterprise AI Teams – Supports large-scale deployments that require consistent evaluation.

- Software Developers & Machine Learning Engineers – Provides tools for debugging, performance tracking, and cost analysis.

- Content and Customer Support Platforms – Improves AI-generated responses for better user interactions.

Its ability to track, measure, and refine AI systems makes it a practical choice for teams that rely on LLM applications.

Key Benefits

LangWatch stands out by providing structured tools for monitoring, evaluation, and cost control.

- Complete Performance Tracking – Offers visibility into real-world model behavior.

- Automated Assessments – Reduces manual work with standardized evaluation metrics.

- Cost Optimization – Helps prevent unnecessary spending on API usage.

- Reliable CI/CD Integration – Assesses models before deployment.

By using LangWatch, businesses can manage LLM applications effectively while maintaining accuracy and efficiency.

LangWatch Pros and Cons

LangWatch provides structured tools for monitoring and evaluating LLM applications. It offers several advantages for developers and businesses, though some areas may need further flexibility based on project requirements.

Pros

- Detailed Tracking – Logs all interactions, making it easier to review performance and identify issues.

- Automated Assessments – Includes more than 40 built-in metrics to evaluate accuracy, consistency, and relevance.

- Cost Analysis – Helps track API usage and spending, allowing teams to manage budgets effectively.

- Seamless CI/CD Integration – Works within development pipelines to check models before deployment.

- Adaptability – Supports different project sizes, making it useful for small teams and enterprises.

Cons

- Pricing Considerations – May be expensive for smaller teams or those with limited budgets.

- Learning Curve – Some features take time to understand, particularly for those new to LLM monitoring.

- Customization Limits – While it includes many evaluation metrics, businesses with unique needs may require more flexibility.

LangWatch provides structured tools for managing AI models, though pricing and customization should be evaluated based on project needs.

LangWatch Expert Opinion & Deep Dive

As an AI developer, I have worked with multiple tools that monitor and optimize Large Language Model (LLM) applications. LangWatch brings a structured approach to this process, offering tracking, evaluation, and cost analysis within a single system.

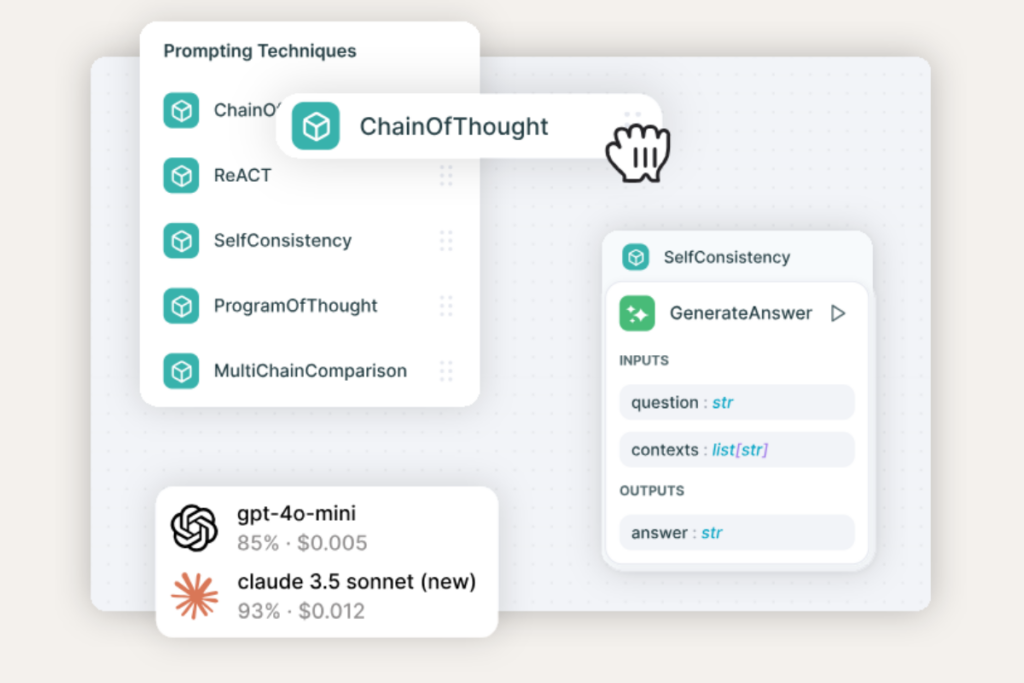

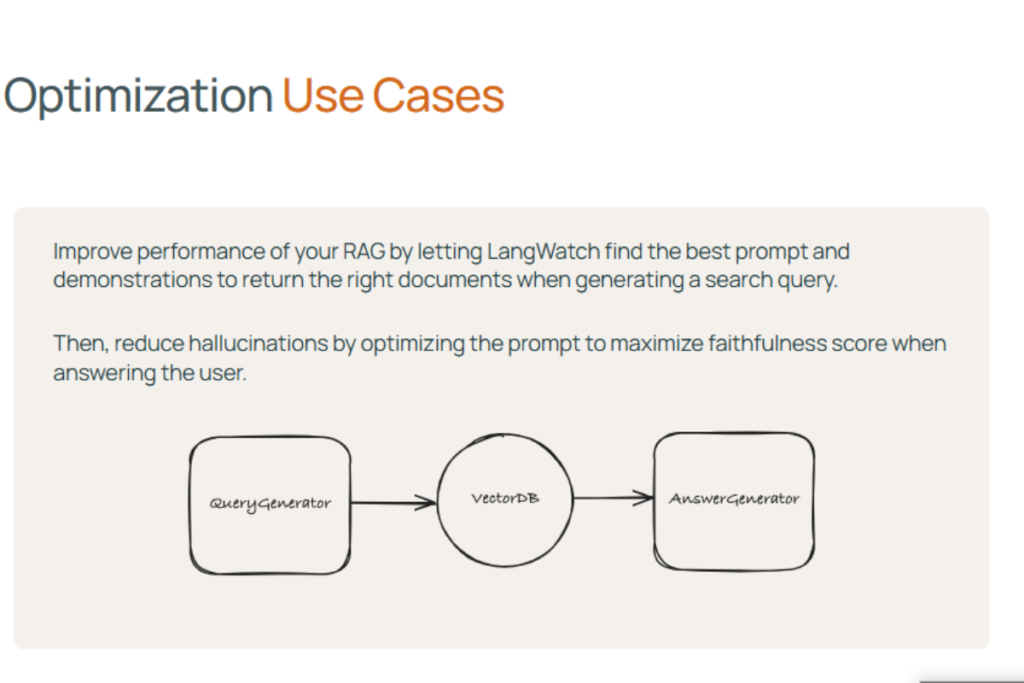

Its integration with Stanford’s DSPy framework helps automate the process of finding the best model configurations, improving efficiency in managing AI applications.

Standout Features

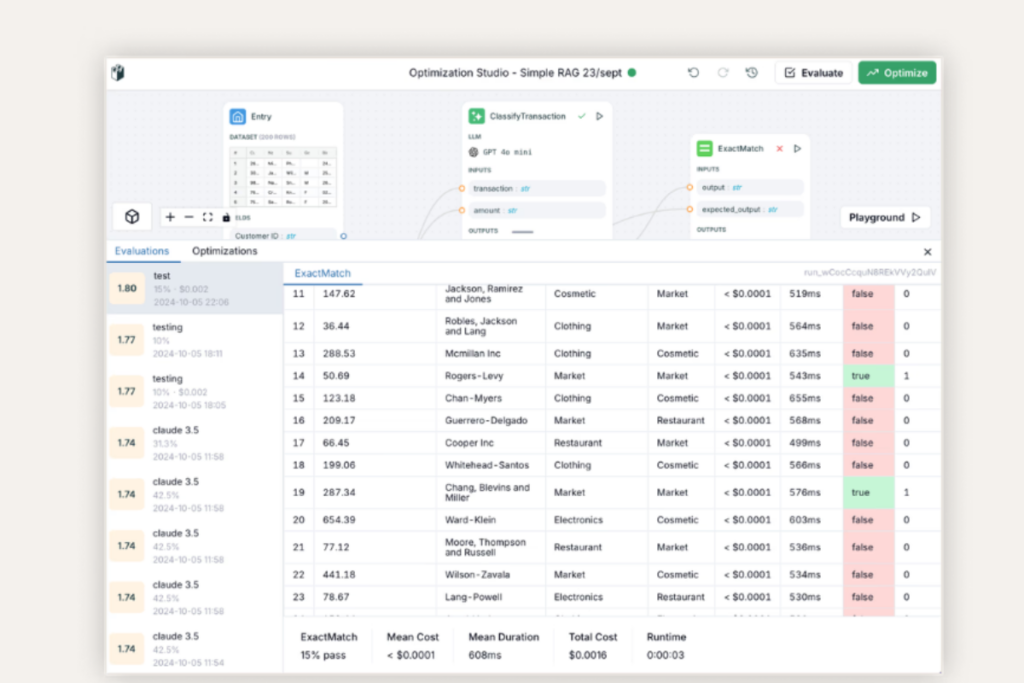

- Optimization Studio – Helps AI teams experiment with different model prompts and settings, speeding up workflow improvements.

- DSPy Integration – Uses DSPy optimizers to refine prompts and pipeline settings based on evaluation results rather than manual trial and error.

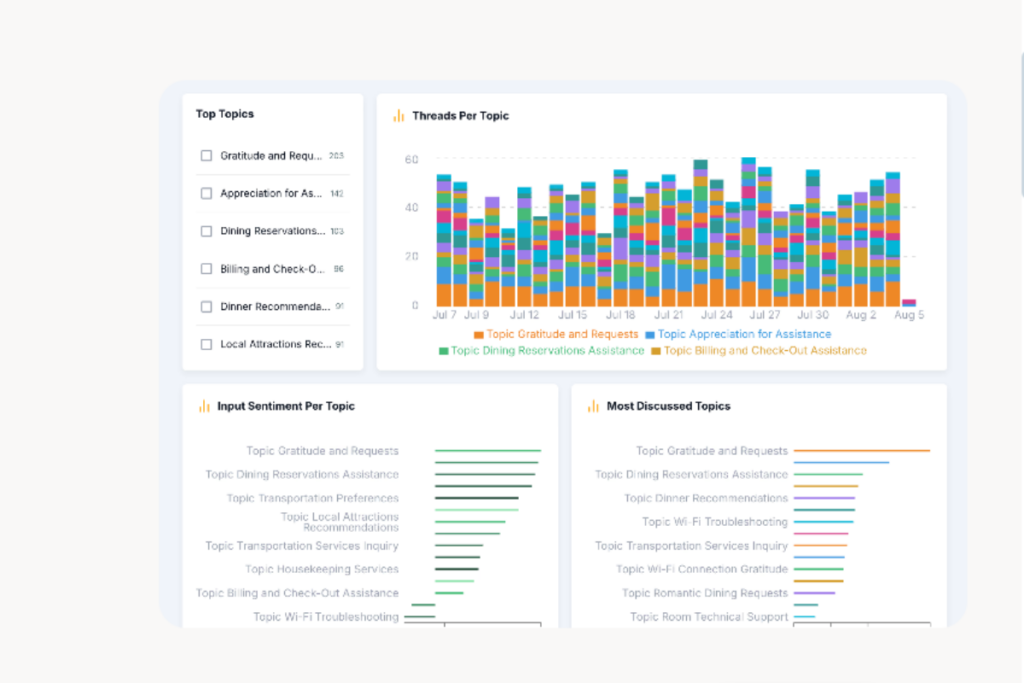

- Analytics Dashboard – Provides clear visual breakdowns of performance and usage, giving teams the information needed to make informed decisions.
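To make the idea of automated configuration search concrete, here is a minimal sketch that scores every combination of prompt template and temperature against a small test set and keeps the best one. It is a brute-force grid search, not DSPy's algorithm and not LangWatch's Optimization Studio; DSPy's optimizers are considerably more sophisticated. The dataset, `fake_model`, templates, and temperatures are all hypothetical stand-ins for real model calls.

```python
from itertools import product

# Hypothetical evaluation set: (question, keyword the answer must contain).
DATASET = [
    ("What is the return window?", "30 days"),
    ("Do you ship abroad?", "yes"),
]


def fake_model(prompt: str, temperature: float) -> str:
    """Hypothetical stand-in for an LLM call: only the 'concise' template
    answers correctly, and only at low temperature (illustrative behavior)."""
    if prompt.startswith("Answer concisely") and temperature <= 0.3:
        return "30 days" if "return" in prompt else "yes"
    return "I'm not sure."


def score(template: str, temperature: float) -> float:
    """Fraction of dataset questions whose answer contains the expected keyword."""
    hits = 0
    for question, expected in DATASET:
        answer = fake_model(template.format(q=question), temperature)
        hits += expected.lower() in answer.lower()
    return hits / len(DATASET)


TEMPLATES = ["Answer concisely: {q}", "You are a helpful assistant. {q}"]
TEMPERATURES = [0.0, 0.7]

# Try every (template, temperature) pair and keep the highest-scoring one.
best = max(product(TEMPLATES, TEMPERATURES), key=lambda cfg: score(*cfg))
print("best configuration:", best, "score:", score(*best))
```

With a real model, `fake_model` would be replaced by an API call and `score` by a proper evaluator; the search loop itself stays the same.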

Areas That May Need Adjustments

LangWatch has a strong feature set, but some aspects could be refined. Those unfamiliar with DSPy might take time to get accustomed to its functions.

Additionally, the pricing model may be more suitable for mid-sized and large teams, which could make adoption challenging for smaller businesses with tighter budgets.

Best Use Cases

LangWatch works well for businesses looking to improve their LLM applications with structured monitoring and performance analysis. A customer support platform using AI-powered chatbots, for example, can use LangWatch to track responses, ensuring accurate and relevant interactions.

If the chatbot starts generating off-topic replies, LangWatch helps identify patterns in the responses, allowing developers to refine the model before these issues affect user experience. AI-driven applications need continuous evaluation to function efficiently.

LangWatch stands out as a structured tool for monitoring, optimization, and cost tracking. Its ability to support collaboration between developers and subject matter experts makes it a strong choice for teams working on LLM projects.

LangWatch Key Features

LangWatch provides structured tools for monitoring and improving Large Language Model (LLM) applications. Its features help developers and businesses track usage, evaluate accuracy, and manage costs efficiently. Below is a breakdown of its core functions.

Real-Time Monitoring

LangWatch records every interaction with an LLM, allowing teams to track how the model responds in different scenarios. This helps identify patterns, ensuring outputs remain relevant and accurate.

Because logs are centralized, errors can be detected early, reducing the need for extensive manual testing. This is particularly useful for AI-driven chatbots and automated content tools that require consistent responses.
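Conceptually, recording every interaction means wrapping each model call so its input, output, latency, and any error are captured in one place. The sketch below shows that pattern with a plain Python decorator and an in-memory list. It is not the LangWatch SDK; `traced`, `TRACE_LOG`, and `fake_llm` are hypothetical names used only for illustration.

```python
import functools
import time
from typing import Any, Callable

# In-memory stand-in for a tracing backend (hypothetical).
TRACE_LOG: list[dict[str, Any]] = []


def traced(fn: Callable[..., str]) -> Callable[..., str]:
    """Record input, output, error, and latency for every call to fn."""
    @functools.wraps(fn)
    def wrapper(prompt: str, **kwargs: Any) -> str:
        record: dict[str, Any] = {"function": fn.__name__, "input": prompt,
                                  "output": None, "error": None}
        start = time.perf_counter()
        try:
            record["output"] = fn(prompt, **kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)  # keep the failure visible in the log
            raise
        finally:
            # Runs on success and on failure, so every call is logged.
            record["latency_s"] = time.perf_counter() - start
            TRACE_LOG.append(record)
    return wrapper


@traced
def fake_llm(prompt: str) -> str:
    """Hypothetical model call that fails on empty prompts."""
    if not prompt.strip():
        raise ValueError("empty prompt")
    return f"Echo: {prompt}"


fake_llm("Where is my order?")
try:
    fake_llm("   ")
except ValueError:
    pass

# Errors can now be found by filtering the central log.
errors = [r for r in TRACE_LOG if r["error"]]
print(f"{len(TRACE_LOG)} calls traced, {len(errors)} with errors")
```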

Automated Model Evaluations

With over 40 built-in evaluation metrics, LangWatch helps measure accuracy, coherence, and relevance. Manual assessment is time-consuming, but automated evaluations provide a faster way to maintain quality.

Running these checks continuously allows teams to refine AI models without extensive human oversight. In my experience, this feature helps keep model outputs reliable, making it easier to scale AI-based applications.

Cost Tracking and Optimization

Managing expenses is crucial for teams using LLMs, especially when working with API-based models. LangWatch provides detailed reports on resource usage, helping businesses track where spending is highest.

By identifying inefficiencies, teams can adjust settings to avoid unnecessary costs. For growing companies, having a clear view of expenses makes it easier to plan budgets and prevent unexpected charges.
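The cost breakdown described above boils down to multiplying token counts by per-token prices and grouping the result. A minimal sketch, assuming trace records that carry a feature name, a model name, and token counts; the model names and prices are made-up placeholders, not real provider rates, and this is not how LangWatch computes costs internally.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens: (input, output). Not real rates.
PRICES = {
    "model-small": (0.50, 1.50),
    "model-large": (5.00, 15.00),
}

# Hypothetical trace records, one per LLM call.
traces = [
    {"feature": "chatbot", "model": "model-large", "input_tokens": 1200, "output_tokens": 400},
    {"feature": "chatbot", "model": "model-large", "input_tokens": 800, "output_tokens": 300},
    {"feature": "summarizer", "model": "model-small", "input_tokens": 5000, "output_tokens": 600},
]


def call_cost(trace: dict) -> float:
    """Cost of a single call from its token counts and the model's prices."""
    in_price, out_price = PRICES[trace["model"]]
    return (trace["input_tokens"] * in_price + trace["output_tokens"] * out_price) / 1_000_000


def cost_by_feature(records: list[dict]) -> dict[str, float]:
    """Sum call costs per product feature."""
    totals: dict[str, float] = defaultdict(float)
    for t in records:
        totals[t["feature"]] += call_cost(t)
    return dict(totals)


totals = cost_by_feature(traces)
top = max(totals, key=totals.get)  # feature where spending is highest
print(totals, "highest:", top)
```

Grouping by feature rather than by model makes it easier to see which part of a product drives spending, which is where adjustments such as switching to a smaller model pay off most.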

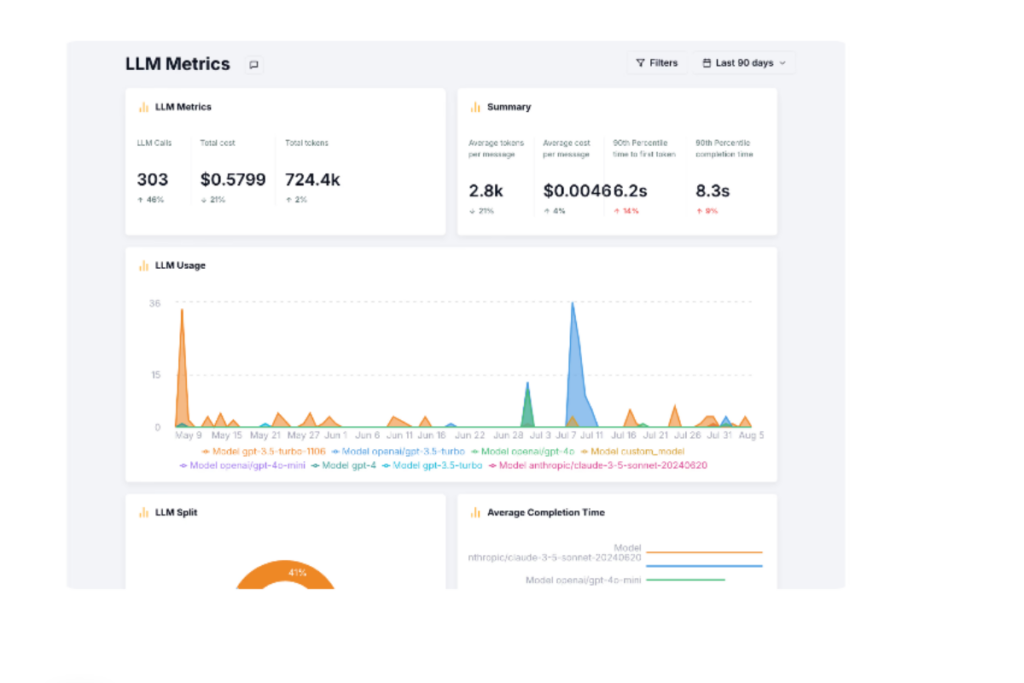

LLM Metrics

Troubleshooting AI responses can be time-consuming, especially when dealing with unpredictable outputs. LangWatch's LLM metrics provide a way to track errors and identify recurring problems.

If a chatbot starts generating inaccurate or irrelevant replies, this tool highlights areas that need refinement. This helps developers make targeted adjustments rather than relying on trial and error. From my experience, this feature significantly speeds up the process of fine-tuning AI systems.
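Finding recurring problems usually means grouping evaluation results and ranking the groups by failure rate. The sketch below assumes each trace has already been labeled pass/fail by some evaluator (for example an off-topic check) and tagged with a user intent; the records and field names are hypothetical.

```python
from collections import Counter

# Hypothetical evaluated traces: user intent plus a pass/fail label from an evaluator.
evaluated = [
    {"intent": "refund", "passed": False},
    {"intent": "refund", "passed": False},
    {"intent": "shipping", "passed": True},
    {"intent": "refund", "passed": True},
    {"intent": "account", "passed": False},
]


def failure_rates(records: list[dict]) -> dict[str, float]:
    """Share of failed evaluations per intent."""
    totals = Counter(r["intent"] for r in records)
    failures = Counter(r["intent"] for r in records if not r["passed"])
    # Counter returns 0 for intents that never failed.
    return {intent: failures[intent] / totals[intent] for intent in totals}


rates = failure_rates(evaluated)
# Rank intents from most to least problematic to target fixes.
worst = sorted(rates.items(), key=lambda kv: kv[1], reverse=True)
print(worst)
```

In practice a small group with a 100% failure rate (like `account` here) may matter less than a large one with a moderate rate, so counts are worth showing next to rates.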

Seamless CI/CD Integration

For teams that update their AI models frequently, LangWatch connects directly to CI/CD pipelines. This ensures models are tested before deployment, reducing the risk of performance issues in production.

Developers can automate evaluations, making it easier to maintain consistency across updates. Businesses that depend on AI for customer interactions or operational processes benefit from this structured approach to testing.
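A CI/CD evaluation step typically acts as a quality gate: score a fixed test set and fail the build if the average falls below a threshold. The sketch below uses a deliberately simple keyword-matching scorer as a hypothetical stand-in for LangWatch's built-in evaluators, along with hypothetical test outputs and an assumed 0.8 threshold. It computes the exit code a CI script would return rather than calling `sys.exit` directly.

```python
def keyword_score(output: str, expected_keywords: list[str]) -> float:
    """Hypothetical scorer: fraction of expected keywords found in the output."""
    if not expected_keywords:
        return 1.0
    text = output.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def quality_gate(results: list[dict], threshold: float = 0.8) -> bool:
    """Pass only if the mean score over all test cases reaches the threshold."""
    scores = [keyword_score(r["output"], r["keywords"]) for r in results]
    mean = sum(scores) / len(scores)
    print(f"mean score {mean:.2f} vs threshold {threshold:.2f}")
    return mean >= threshold


# Hypothetical outputs collected from the model version under test.
results = [
    {"output": "You can return items within 30 days.", "keywords": ["return", "30 days"]},
    {"output": "Our store opens at 9am.", "keywords": ["refund", "card"]},
    {"output": "Refunds go back to your card in 5 days.", "keywords": ["refund", "card"]},
]

# A CI script would finish with sys.exit(exit_code); non-zero fails the build.
exit_code = 0 if quality_gate(results) else 1
```

Here one of three answers misses the point entirely, the mean drops to about 0.67, and the gate blocks the deployment.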

Collaboration and Reporting

LangWatch provides shared dashboards and reporting tools that allow teams to track performance in one place.

Developers, data scientists, and business leaders can access insights without relying on separate systems. Custom reports help track progress over time, making it easier to refine AI strategies.

LangWatch Pricing

LangWatch provides different pricing plans to support businesses based on their team size and AI requirements. Below is a breakdown of its plans, including costs, features, and scalability options.

Pricing Plans

| Plan | Price | Best For | Key Features |
|---|---|---|---|
| Launch | €59/month | Small teams & startups | Optimization Studio (dspy-optimizers); up to 10 workflows; 10k traces; 30-day retention; 1 project; 1 team member; Slack support; €19 per additional user; €49 per 100k extra traces; GDPR compliance; SSO: GitHub, Google |
| Accelerate | €499/month | Growing teams & complex AI implementations | Optimization Studio (dspy-optimizers); up to 50 workflows; 100k traces; 60-day message retention; 10 projects; 10 team members; tech onboarding & Slack support; €10 per additional user; €45 per 100k extra traces; custom evaluations; dedicated Slack account; emergency phone support |
| Enterprise | Custom pricing | Organizations needing advanced security, deployment, and support | Optimization Studio (dspy-optimizers); custom message limits; custom projects; unlimited team members; custom message retention; self-hosted deployment; role-based access controls; analytics per API; bring your own models; dedicated Slack support; 1:1 onboarding sessions; emergency phone support |

Considerations

- The Launch plan is cost-effective for small teams but is limited to 10k traces, 30-day retention, one project, and one team member. Each additional user costs €19 and each additional 100k traces costs €49.

- The Accelerate plan is built for teams that require more flexibility and resources. This option works well for businesses that rely on AI systems at a larger scale.

- The Enterprise plan is fully customized, allowing organizations to adjust security, deployment, and scalability to match their needs.
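To compare the two fixed-price plans for a given workload, the overage rules in the table can be turned into a small calculator. This sketch assumes that extra traces are billed per started block of 100k and that the listed trace allowances are monthly; both are assumptions, not confirmed billing terms.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    base_eur: int             # monthly base price
    included_users: int
    included_traces: int
    eur_per_extra_user: int
    eur_per_100k_traces: int  # price per block of 100k extra traces


# Figures taken from the pricing table above.
LAUNCH = Plan("Launch", 59, 1, 10_000, 19, 49)
ACCELERATE = Plan("Accelerate", 499, 10, 100_000, 10, 45)


def monthly_cost(plan: Plan, users: int, traces: int) -> int:
    """Estimated monthly cost in euros for a given team size and trace volume."""
    extra_users = max(0, users - plan.included_users)
    extra_traces = max(0, traces - plan.included_traces)
    # Assumption: overage is billed per started block of 100k traces.
    trace_blocks = math.ceil(extra_traces / 100_000)
    return (plan.base_eur
            + extra_users * plan.eur_per_extra_user
            + trace_blocks * plan.eur_per_100k_traces)


for plan in (LAUNCH, ACCELERATE):
    print(plan.name, monthly_cost(plan, users=3, traces=150_000))
```

For a team of three sending 150k traces a month, this gives €195 for Launch (two extra users plus two blocks of extra traces) and €544 for Accelerate (one block of extra traces), so Launch stays cheaper at that volume under these assumptions.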

LangWatch Use Cases

LangWatch supports businesses that require structured monitoring, performance evaluation, and cost control for AI applications.

AI-Powered Customer Support

Businesses using AI-driven chat systems can improve accuracy and efficiency with LangWatch’s tracking and error analysis. These tools help teams refine AI-generated responses, preventing inaccurate or irrelevant answers.

An e-commerce company handling thousands of customer inquiries daily can use LangWatch to track patterns, improve chatbot responses, and maintain quality.

Machine Learning Development and Testing

Development teams working on AI-powered applications often need structured ways to test and analyze performance. LangWatch automates evaluations and provides debugging tools, reducing the manual effort required for quality control.

This is particularly useful for businesses that develop AI models for automated decision-making, document processing, or predictive analytics.

Enterprise-Level AI Deployment

Organizations managing large AI models across multiple projects need greater control over security, compliance, and system reliability.

The Enterprise plan provides role-based access, self-hosted deployment, and deeper analytics. Financial institutions that rely on AI for fraud detection or risk assessment can use LangWatch to track performance while maintaining strict security requirements.

AI Content Generation and Moderation

Companies using AI-generated content in publishing, marketing, or user moderation can improve quality by using LangWatch’s automated evaluations and dataset management.

Teams working on AI-driven news generation, automated blog writing, or content moderation for online platforms can track and refine outputs more effectively.

LangWatch provides structured tools for teams that need greater oversight of AI applications. These tools help businesses refine performance, reduce costs, and maintain consistency.

LangWatch Support

LangWatch offers a structured system for monitoring and refining AI models. Ease of use and support are key factors in how effectively teams can integrate the platform into their workflows. The sections below cover the user experience and customer support options.

Ease of Use & Onboarding

LangWatch has an organized interface that presents key metrics, performance data, and cost analysis in a structured format. Those familiar with AI model monitoring will find the layout intuitive, while those new to LLM optimization may take time to adjust.

The onboarding process varies based on the selected plan:

- Launch Plan: Provides access to documentation and Slack support for self-guided setup.

- Accelerate Plan: Includes structured onboarding to assist teams with setting up workflows and understanding key features.

- Enterprise Plan: Offers personalized onboarding sessions with dedicated guidance.

While technical users may adapt quickly, those new to LLM tracking may need extra time to familiarize themselves with all available tools.

Customer Support Quality

LangWatch provides different support levels, depending on the plan:

- Slack Support (All Plans): Shared Slack channels allow users to submit questions and receive guidance from the LangWatch team.

- Dedicated Slack Support (Accelerate & Enterprise Plans): Private communication channels with faster response times.

- Emergency Phone Support (Accelerate & Enterprise Plans): Direct access to specialists for urgent technical issues.

- 1:1 Onboarding Sessions (Enterprise Plan): Personalized sessions for assistance with implementation and optimization.

Response times vary based on the plan, with priority given to enterprise users. Documentation is available for troubleshooting, and the combination of Slack channels and onboarding support allows teams to find solutions.

LangWatch is built for teams managing AI applications, with support services aligned to the complexity of these workflows. While the platform is structured for technical users, those new to LLM monitoring may need time to utilize all features fully.

LangWatch Integrations

LangWatch connects with a range of platforms, making it easier for teams to track, evaluate, and refine AI models without disrupting existing workflows. The platform is built to work with widely used tools across development, communication, and data management.

Supported Integrations

LangWatch works with several key systems to improve the monitoring and evaluation of AI applications. Some of its primary connections include:

- Large Language Model APIs – Works with OpenAI, Anthropic, Hugging Face, and other AI providers, allowing direct tracking of responses and performance trends.

- Development and Deployment Tools – Integrates with GitHub, Google Cloud, and AWS, making it easier to automate testing and monitor models before updates go live.

- Communication and Collaboration Tools – Connects with Slack to send real-time alerts, keeping teams updated on any issues affecting AI-generated responses.

- Data and Analytics Platforms – Works with existing databases and visualization tools, helping teams organize and interpret performance insights.

These integrations help reduce manual tracking, allowing teams to manage AI workflows more efficiently.
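For a sense of what a Slack alert involves, the sketch below builds a request in the JSON format Slack's incoming webhooks accept (a `text` field) when an error rate crosses a threshold. LangWatch configures its Slack integration itself; this is only an illustration, the webhook URL is a placeholder, and the 5% threshold is an arbitrary assumption. The request is built but not sent, so the sketch runs offline.

```python
import json
import urllib.request
from typing import Optional

# Placeholder, not a real webhook URL.
WEBHOOK_URL = "https://hooks.slack.com/services/T000/B000/XXXX"


def build_alert(error_rate: float, threshold: float = 0.05) -> Optional[urllib.request.Request]:
    """Return a POST request for a Slack webhook, or None if no alert is needed."""
    if error_rate <= threshold:
        return None
    payload = {"text": f":warning: LLM error rate {error_rate:.1%} exceeds {threshold:.0%}"}
    return urllib.request.Request(
        WEBHOOK_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_alert(0.12)
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```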

Compatibility with Devices and Operating Systems

LangWatch runs as a cloud-based platform that is accessible from any device with an internet connection. The web-based interface eliminates the need for software installation and works across different operating systems.

- Windows, macOS, and Linux – Functions on all major operating systems through modern web browsers.

- Cloud-Based AI Infrastructure – Supports self-hosted and cloud-deployed AI models, allowing teams to choose the best setup for their needs.

- API Accessibility – Provides API endpoints for businesses looking to integrate LangWatch with internal tools or custom applications.

With broad compatibility and integration support, LangWatch fits into a variety of technical environments. It allows teams to track AI performance without making significant changes to their existing systems.

LangWatch FAQs

LangWatch helps teams manage and evaluate large language models. Below are answers to common questions about pricing, integrations, features, and support.

1. What pricing plans are available for LangWatch?

LangWatch offers three options: Launch (€59/month) for small teams, Accelerate (€499/month) for expanding businesses, and Enterprise (custom pricing) for organizations with advanced security and deployment needs.

2. Does LangWatch provide a free trial?

Yes, all plans allow users to test the platform before committing to a subscription.

3. What platforms and tools work with LangWatch?

LangWatch integrates with OpenAI, Anthropic, Hugging Face, GitHub, AWS, Google Cloud, Slack, and various data visualization platforms.

4. What support options are available?

Users receive Slack support across all plans, while higher tiers include dedicated assistance and emergency phone support.

5. Is LangWatch suitable for non-technical users?

LangWatch is structured for AI teams and developers. Those new to LLM monitoring may need time to understand the full range of features.

6. Can LangWatch be used with self-hosted AI models?

Yes, the Enterprise plan allows for self-hosted deployment, role-based access, and enhanced security for private AI environments.

LangWatch Alternatives

LangWatch competes with several tools that provide monitoring, evaluation, and optimization for Large Language Models (LLMs). Below is a structured comparison of LangWatch, Langfuse, Lamini, and LlamaIndex.

| Feature | LangWatch | Langfuse | Lamini | LlamaIndex |
|---|---|---|---|---|
| Core Functionality | Tracks, evaluates, and optimizes LLMs with automated assessments and cost monitoring. | Focuses on observability, logging, and debugging for LLM workflows. | Enterprise-level platform for building and fine-tuning custom LLMs. | Provides tools to connect structured and unstructured data to LLMs. |
| Best Fit | Teams needing structured monitoring, evaluation, and cost tracking for LLMs. | Developers looking for detailed logs and debugging tools. | Organizations developing proprietary LLMs. | Businesses needing data retrieval solutions for LLM-based applications. |
| Key Differentiator | Combines real-time monitoring, automated evaluations, and budget tracking. | Prioritizes logging and structured insights for troubleshooting. | Specializes in training and deploying custom enterprise LLMs. | Optimizes how LLMs access and utilize large datasets. |
| Pricing | Starts at €59/month for small teams, with enterprise pricing available. | Free and paid tiers based on usage. | Custom pricing based on enterprise requirements. | Costs depend on API usage and integrations. |
| Ideal Users | AI teams managing large-scale LLM applications. | Engineers and developers needing structured logs and debugging tools. | Organizations building proprietary LLMs. | Businesses optimizing data retrieval for LLM-driven systems. |

LangWatch is designed for teams that require tracking, evaluation, and cost management for LLMs. Langfuse is a better fit for developers focused on logging and debugging workflows.

Lamini supports organizations creating proprietary LLMs, while LlamaIndex helps businesses improve how AI applications retrieve and use data. Each tool has a different focus, making the choice dependent on specific workflow needs.

Summary of LangWatch

LangWatch was founded in 2023 and is based in Amsterdam, Netherlands. The platform focuses on monitoring and evaluating Large Language Model (LLM) applications, helping businesses analyze user interactions and improve AI-driven products.

In November 2023, LangWatch secured $106,000 in seed funding from Antler, a venture capital firm. The company was founded by Manouk Draisma and Rogerio Chaves, who have over 25 years of experience in the software industry, including roles at Booking.com and Lightspeed.

LangWatch provides tools for user analytics, debugging, and A/B testing, and supports engineering and product teams of various sizes. The platform offers real-time insights, allowing businesses to refine their AI models and track performance over time.

With a focus on data-driven decision-making, LangWatch helps organizations maintain reliable AI applications. The company continues to develop solutions that support continuous optimization of AI-powered workflows.

Conclusion

LangWatch provides structured tools for monitoring, evaluating, and managing costs for teams working with Large Language Models (LLMs). Its key strengths include real-time tracking, automated assessments, and seamless integration with widely used AI and development platforms.

These features make it a strong option for AI teams, developers, and enterprises looking to improve LLM performance. Pricing could be a consideration for smaller teams that require advanced features.

We recommend LangWatch for businesses that need detailed tracking and performance optimization for AI-driven applications. Testing the free trial can help determine whether it aligns with specific project needs.

Have experience using LangWatch? Share your thoughts and insights to help others make informed decisions. Visit the LangWatch website to explore its features and see how it fits into your AI workflows.